Introduction to Wan 2.1 and How to Use WanVideo to Create Magic Videos

Table of Contents

- What is Wan 2.1?

- Key Features of Wan 2.1

- How WanVideo Works

- Getting Started with WanVideo

- Text-to-Video Creation Guide

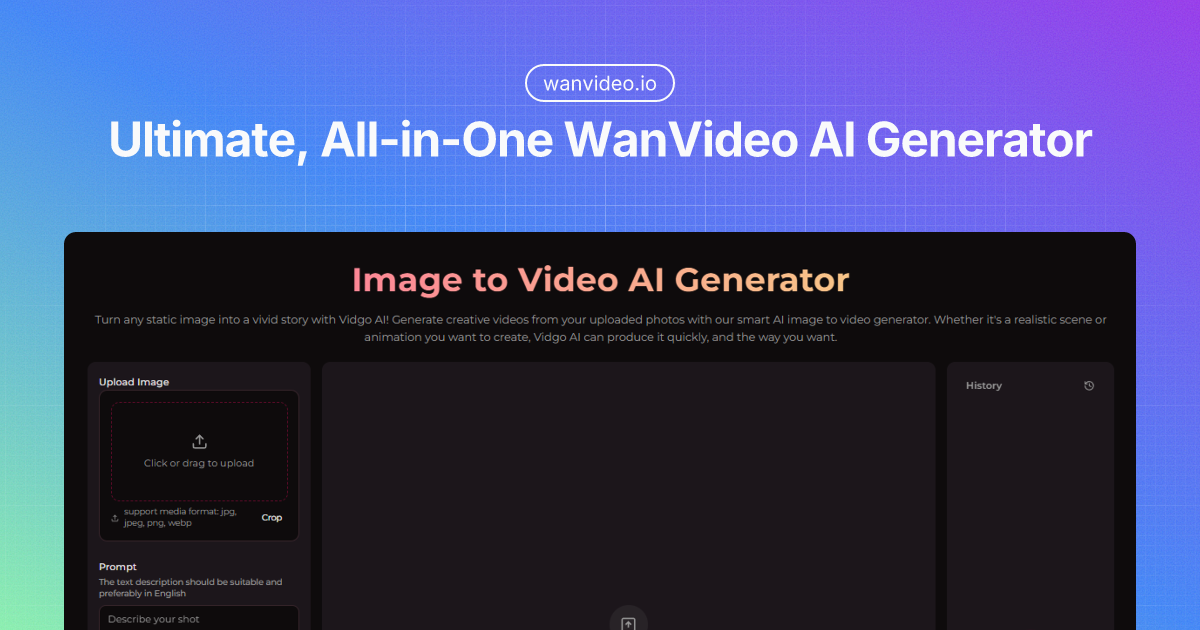

- Image-to-Video Transformation

- Advanced Tips for Better Results

- Technical Specifications

- Comparing Wan 2.1 with Other Video AI Models

- Future of AI Video Generation

- Conclusion

What is Wan 2.1?

Wan 2.1 is a groundbreaking AI video generation model developed by Alibaba's Tongyi Lab. Released as an open-source suite of video foundation models, Wan 2.1 represents a significant leap forward in making high-quality video generation accessible to everyone. This powerful AI system can transform simple text prompts or static images into dynamic, fluid videos with remarkable quality and realism.

As one of the most advanced open-source video generators available today, Wan 2.1 has quickly gained popularity among creators, developers, and AI enthusiasts. What makes it particularly special is that its lightweight 1.3B parameter model needs only 8.19GB of VRAM, so it can run on consumer-grade GPUs while still producing high-quality results.

The WanVideo Official Site serves as the primary platform for accessing these powerful tools, offering both free and premium options for different user needs. Whether you're a content creator looking to enhance your videos, a developer integrating video generation into applications, or simply an enthusiast exploring AI capabilities, Wan 2.1 provides an accessible entry point into the world of AI video creation.

Key Features of Wan 2.1

Wan 2.1 stands out in the crowded field of AI video generators thanks to several impressive capabilities:

Multiple Generation Methods

- Text-to-Video (T2V): Transform written descriptions into fully animated videos

- Image-to-Video (I2V): Bring static images to life with natural motion

- Video Editing: Enhance or modify existing video content

- Text-to-Image: Generate still images from textual descriptions

- Video-to-Audio: Add complementary audio to video content

Technical Advantages

- High-Quality Output: Creates videos with smooth movements and realistic physics

- Efficiency: The 1.3B parameter model requires only 8.19GB VRAM, making it accessible on consumer GPUs

- Multilingual Support: Works with both English and Chinese inputs

- Open-Source Architecture: Available for academic, research, and commercial use

Performance Benchmarks

Wan 2.1 has topped the VBench leaderboard, a comprehensive benchmark for video generation models, scoring particularly well in areas like movement quality, spatial relationships, and multi-object interactions. This places it among the most capable video generation systems currently available, competing favorably with proprietary models like OpenAI's Sora.

How WanVideo Works

The magic behind WanVideo lies in its sophisticated AI architecture. At its core, Wan 2.1 utilizes several advanced components:

- 3D Variational Autoencoder (Wan-VAE): Compresses and decompresses video data efficiently

- Video Diffusion DiT: Generates high-quality video frames

- Flow Matching Framework: The training and sampling formulation, in which the model learns to move random noise toward video along a continuous path

- T5 Encoder: Processes text inputs for accurate representation

- Transformer Blocks with Cross-Attention: Connects textual concepts with visual elements

This complex system works together seamlessly to interpret your input (whether text or image) and generate a cohesive video output that accurately represents the intended content. The process happens in several stages:

- Input processing (text encoding or image analysis)

- Content planning and scene composition

- Frame-by-frame generation with temporal consistency

- Post-processing for enhanced quality and coherence

The result is a video that not only looks good in individual frames but maintains continuity and logical movement throughout its duration.
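To make the flow matching component listed above a little more concrete, here is a toy sketch in plain Python. It is not Wan 2.1's sampler: the real model predicts the velocity with a large diffusion transformer over video latents, whereas `ideal_velocity` below is a hand-written stand-in that assumes the target is already known. The function names, the step count, and the three-number "latent" are all illustrative assumptions.

```python
import random


def ideal_velocity(x, target, t):
    """Velocity of the straight-line path from noise to target at time t.

    Stand-in for a learned network; a real model never sees `target`.
    """
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, target)]


def euler_sample(target, steps=10, seed=0):
    """Integrate dx/dt = v(x, t) from t=0 (noise) to t=1 with Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # start from Gaussian noise
    dt = 1.0 / steps
    for k in range(steps):
        t = k / steps  # t stays below 1, so the division above is safe
        v = ideal_velocity(x, target, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


sample = euler_sample([0.5, -1.0, 2.0])
print([round(v, 6) for v in sample])
```

Because the stand-in velocity always points straight at the target, the final Euler step lands on it (up to floating-point error) whatever the random starting point was. In a trained model, the text or image conditioning is what steers that velocity.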

Getting Started with WanVideo

Getting started with WanVideo is straightforward, even for beginners. Here's how to begin your AI video creation journey:

Step 1: Choose Your Creation Method

WanVideo offers two main creation methods:

- Text-to-Video (T2V): Transform written descriptions into fully animated videos

- Image-to-Video (I2V): Bring static images to life with natural motion

Each method has its own advantages. Text-to-video offers maximum creative freedom, while image-to-video gives you more control over the visual style and content.

Step 2: Create an Account

While WanVideo offers some free generation capabilities, creating an account will give you access to:

- Higher resolution outputs

- Longer video durations

- Advanced editing features

- Saved projects and history

- Watermark-free video downloads

The registration process is simple and requires just an email address to get started.

Step 3: Select a Template

WanVideo provides various templates to help you get started:

- Browse through the available templates

- Select a template that matches your creative vision

- Some templates are effect-based and come with pre-defined prompts

- Others allow you to customize your own prompt

Step 4: Prepare Your Content

For Image-to-Video:

- Upload one or two images

- Single image: Upload one image for direct conversion

- Two images: Upload two images to create a side-by-side comparison

- Use the built-in cropping tool to adjust your images

- Adjust zoom level

- Modify aspect ratio

- Preview the final result

- Wait for the upload to complete
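If you prefer to prepare images before uploading, the arithmetic behind a centered aspect-ratio crop is simple. The sketch below is an assumption about what such a crop does, not a description of WanVideo's built-in tool; `center_crop_box` is a hypothetical helper that only computes the crop rectangle.

```python
def center_crop_box(width, height, ratio_w, ratio_h):
    """Return (left, top, crop_width, crop_height) for a centered crop."""
    if width * ratio_h > height * ratio_w:
        # Image is wider than the target ratio: keep the full height.
        crop_h = height
        crop_w = height * ratio_w // ratio_h
    else:
        # Image is taller than (or equal to) the target: keep the full width.
        crop_w = width
        crop_h = width * ratio_h // ratio_w
    left = (width - crop_w) // 2
    top = (height - crop_h) // 2
    return left, top, crop_w, crop_h


print(center_crop_box(1920, 1080, 1, 1))  # square crop from a landscape photo
```

The returned box can be passed to any image editor or library that accepts pixel coordinates.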

For Text-to-Video:

- Enter your prompt in the text area

- Be specific about the scene, movement, and style

- Use the copy and clear buttons to manage your prompt

Step 5: Generate Your Video

- Click the "Generate Video" button

- Complete the verification process

- Wait for the generation to complete (typically a few minutes)

- The video will appear in the results section

Step 6: Download and Share

Once your video is generated, you can:

- Preview the video directly in the browser

- Download the video with watermark (free)

- Download the video without watermark (premium feature)

- View detailed information about your generation

- Access your generation history

Step 7: Manage Your History

WanVideo keeps track of all your generations:

- Access your history panel on the right side (desktop) or bottom sheet (mobile)

- View previous generations

- Re-download videos

- Check generation details

- Monitor your credit usage

Tips for Best Results

- Use high-quality images for better results

- Be specific in your text prompts

- Experiment with different templates

- Check your credit balance before generation

- Use the cropping tool to ensure proper aspect ratio

- Consider using two images for comparison videos

Text-to-Video Creation Guide

The Text-to-Video feature is perhaps the most magical aspect of WanVideo, allowing you to turn an idea into a video with just words. Here's how to get the best results:

Crafting Effective Prompts

The quality of your text prompt directly influences the quality of your video. Follow these guidelines:

- Be Specific: "A red sports car driving fast on a coastal highway at sunset" works better than "a car driving"

- Include Visual Details: Mention colors, lighting, weather, and atmosphere

- Describe Movement: Specify how objects should move ("swaying gently," "racing quickly")

- Set the Scene: Include background elements and environment details

- Consider Style: Add artistic direction like "photorealistic," "anime style," or "cinematic"

Sample Prompt Template

[Subject] [action] in/on [location] with [details] during [time of day], [style reference]

Example: "A majestic eagle soaring over snow-capped mountains with sunlight glinting off its wings during golden hour, cinematic quality"
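If you generate many prompts, the template above can be filled in programmatically. This is a minimal sketch: `build_prompt` and its argument names are hypothetical, and the location argument is assumed to carry its own preposition ("over", "in", "on").

```python
def build_prompt(subject, action, location, details, time_of_day, style):
    """Fill the prompt template; `location` includes its preposition."""
    return (
        f"{subject} {action} {location} with {details} "
        f"during {time_of_day}, {style}"
    )


prompt = build_prompt(
    subject="A majestic eagle",
    action="soaring",
    location="over snow-capped mountains",
    details="sunlight glinting off its wings",
    time_of_day="golden hour",
    style="cinematic quality",
)
print(prompt)
```

Keeping each slot as a separate argument makes it easy to vary one element at a time, such as the style, while holding the rest of the scene fixed.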

Adjusting Parameters

WanVideo allows you to fine-tune several generation parameters:

- Video Length: Typically 5-10 seconds (longer videos may lose coherence)

- Resolution: 480p is standard, with 720p available for premium users

- Guidance Scale: Controls how closely the AI follows your prompt (higher values = more literal interpretation)

- Seed: Save this number to recreate similar videos in the future
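The sketch below illustrates two of these parameters in isolation. `GenerationSettings` is a hypothetical container, not WanVideo's API, and its defaults are placeholders. `apply_guidance` shows classifier-free guidance, a common way for diffusion-style models to implement a guidance scale; whether the hosted service applies it exactly this way is an assumption.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class GenerationSettings:
    """Hypothetical settings container; field names are illustrative."""
    duration_s: int = 5
    resolution: str = "480p"
    guidance_scale: float = 5.0  # placeholder default
    seed: int = 42               # placeholder default

    def __post_init__(self):
        if not 5 <= self.duration_s <= 10:
            raise ValueError("duration_s should stay within 5-10 seconds")
        if self.resolution not in ("480p", "720p"):
            raise ValueError("resolution must be '480p' or '720p'")


def initial_noise(settings, n=4):
    """Same seed gives the same starting noise, hence similar videos."""
    rng = random.Random(settings.seed)
    return [rng.gauss(0.0, 1.0) for _ in range(n)]


def apply_guidance(uncond, cond, scale):
    """Classifier-free guidance: push the prediction toward the prompt."""
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


a = initial_noise(GenerationSettings(seed=7))
b = initial_noise(GenerationSettings(seed=7))
print(a == b)
```

With a scale of 1 the guided prediction equals the prompt-conditioned one; larger values exaggerate the difference between the conditioned and unconditioned predictions, which is why higher settings follow the prompt more literally.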

Iterative Refinement

Don't expect perfect results on your first try. The best approach is iterative:

- Start with a basic prompt

- Review the generated video

- Refine your prompt based on what worked and what didn't

- Generate again

- Repeat until satisfied

Image-to-Video Transformation

The Image-to-Video feature allows you to animate static images, bringing photographs, illustrations, or AI-generated images to life. Here's how to use it effectively:

Choosing the Right Base Image

Not all images are equally suitable for animation. The best candidates have:

- Clear subjects with defined boundaries

- Some implied motion potential

- Good composition with foreground and background elements

- High resolution and quality

Avoid images that are already blurry, have multiple overlapping subjects, or extremely complex scenes.

Setting Motion Parameters

WanVideo gives you control over how your image animates:

- Motion Strength: Determines how dramatic the movement will be

- Motion Direction: Guides the primary direction of movement

- Focus Point: Indicates which part of the image should be the center of animation

- Duration: Sets how long the resulting video will be

Adding Supplementary Text

You can enhance your image-to-video conversion by adding descriptive text:

- Upload your image

- Add a text description of the desired motion and effects

- Adjust parameters as needed

- Generate your video

This combination of visual and textual input often produces the most impressive results.

Post-Processing Options

After generating your video, WanVideo offers several post-processing options:

- Adjusting playback speed

- Adding transitions

- Applying filters

- Incorporating text overlays

- Adding background music or sound effects

These finishing touches can elevate your creation from impressive to professional.

Advanced Tips for Better Results

Once you're comfortable with the basics, try these advanced techniques to take your WanVideo creations to the next level:

Prompt Engineering

- Use negative prompts to specify what you don't want to see

- Incorporate weight values to emphasize certain elements (beautiful::0.8, detailed::1.2)

- Chain multiple prompts with transitions for more complex narratives
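The `term::weight` notation shown above is easy to handle in your own tooling. The parser below is a sketch that assumes comma-separated items and a default weight of 1.0 for unweighted terms; `parse_weighted_terms` is a hypothetical helper, and how a given interface interprets the weights is outside its scope.

```python
def parse_weighted_terms(text, default_weight=1.0):
    """Split comma-separated 'term::weight' items into (term, weight) pairs."""
    pairs = []
    for item in text.split(","):
        item = item.strip()
        if not item:
            continue  # skip empty items such as a trailing comma
        term, sep, weight = item.partition("::")
        pairs.append((term.strip(), float(weight) if sep else default_weight))
    return pairs


print(parse_weighted_terms("beautiful::0.8, detailed::1.2"))
```

Parsing the weights up front lets you sort, clamp, or sweep them before assembling the final prompt string.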

Technical Optimizations

- For local installations, use half-precision (fp16) to reduce VRAM usage

- Batch similar videos together for more efficient processing

- Use the "ancestral sampling" option for more creative (though less prompt-faithful) results
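To see why half precision helps, consider a rough estimate of the memory needed for the weights alone. This is back-of-the-envelope arithmetic, not a measurement: activations, the text encoder, and the VAE are not counted, and the parameter counts are the nominal 1.3B and 14B figures.

```python
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params, dtype):
    """Memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in [("1.3B", 1.3e9), ("14B", 14e9)]:
    fp32 = weight_memory_gb(params, "fp32")
    fp16 = weight_memory_gb(params, "fp16")
    print(f"{name}: fp32 {fp32:.1f} GB, fp16 {fp16:.1f} GB")
```

Halving the bytes per parameter halves the weight footprint, which for the larger model can decide whether it fits on a single GPU at all.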

Creative Workflows

- Create a storyboard sequence by generating multiple short clips and combining them

- Use image-to-video for establishing shots, then text-to-video for action sequences

- Combine WanVideo with other AI tools for complete production pipelines
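For the storyboard workflow, one option is to join the downloaded clips with FFmpeg's concat demuxer. The sketch below only builds the file list and the command; the clip file names are hypothetical, and stream copy (`-c copy`) assumes every clip shares the same codec, resolution, and frame rate.

```python
def concat_list_text(clips):
    """Text of the file list that FFmpeg's concat demuxer reads."""
    return "".join(f"file '{clip}'\n" for clip in clips)


def concat_command(list_path, output):
    """Build (but do not run) the FFmpeg command that joins the clips."""
    return [
        "ffmpeg", "-f", "concat", "-safe", "0",
        "-i", list_path, "-c", "copy", output,
    ]


clips = ["shot_01.mp4", "shot_02.mp4", "shot_03.mp4"]  # hypothetical names
print(concat_list_text(clips), end="")
print(" ".join(concat_command("clips.txt", "storyboard.mp4")))
```

Save the list text to `clips.txt`, then run the command from a shell or pass the list to `subprocess.run`. If the clips differ in resolution or codec, re-encoding instead of stream copy is the safer choice.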

Common Issues and Solutions

| Problem | Solution |
|---|---|
| Video lacks coherent motion | Specify movement direction more clearly in prompt |
| Poor subject recognition | Use more specific descriptions of key elements |
| Temporal inconsistency | Reduce video duration or simplify the scene |
| Artifacts or glitches | Try a different seed or reduce complexity |
| Low resolution | Upgrade to premium tier or use upscaling tools |

Technical Specifications

For those interested in the technical details, here's what powers Wan 2.1:

Model Architecture

Wan 2.1 comes in two primary sizes:

- 1.3B Parameter Model: Lightweight version that runs on consumer hardware

- 14B Parameter Model: Full-sized version for professional applications

The architecture of the 1.3B model uses:

- Dimension: 1536

- Input Dimension: 16

- Output Dimension: 16

- Feedforward Dimension: 8960

- Frequency Dimension: 256

- Number of Heads: 12

- Number of Layers: 30
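A quick calculation shows how these numbers relate to each other. The per-head width follows directly from the list. The parameter estimate is only a rough sketch that assumes each layer holds a self-attention block, a cross-attention block, and a two-matrix feedforward network, and that ignores embeddings, normalization, and biases.

```python
DIM, FFN_DIM, HEADS, LAYERS = 1536, 8960, 12, 30

head_dim = DIM // HEADS  # width handled by each attention head

# Assumed layout: self-attention + cross-attention, each with four
# DIM x DIM projections (query, key, value, output), plus a feedforward
# network with one up-projection and one down-projection.
attn_params = 2 * 4 * DIM * DIM
ffn_params = 2 * DIM * FFN_DIM
total_params = LAYERS * (attn_params + ffn_params)

print(head_dim)
print(f"{total_params / 1e9:.2f}B parameters in transformer blocks (rough)")
```

Under these assumptions the estimate comes to about 1.39 billion parameters, which is in the range of the lightweight model's nominal size and suggests the listed dimensions describe that variant.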

For more detailed technical specifications, you can refer to the official model card on Hugging Face and the Replicate documentation.

Hardware Requirements

For the 1.3B model:

- Minimum 8.19GB VRAM

- Compatible with RTX 3090/4090 series GPUs

- Generation time: ~4 minutes for a 5-second 480p video on an RTX 4090 (without optimizations such as quantization)

For the 14B model:

- Recommended 24GB+ VRAM

- Professional-grade GPUs recommended

- Generation time: Varies based on hardware
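The figures above can be turned into a simple rule of thumb for choosing a variant. `pick_model` is a hypothetical helper that uses only the thresholds quoted in this section; real memory use also depends on resolution, clip length, and any offloading or quantization.

```python
def pick_model(vram_gb):
    """Map available VRAM (in GB) to the variant suggested above."""
    if vram_gb >= 24:
        return "14B"
    if vram_gb >= 8.19:
        return "1.3B"
    return None  # below the stated minimum for local generation


for vram in (6, 12, 24):
    print(vram, pick_model(vram))
```

If PyTorch with CUDA is installed, `torch.cuda.get_device_properties(0).total_memory` reports the first GPU's memory in bytes, which you can divide by 1e9 and pass in.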

For detailed hardware compatibility and optimization guides, check out the ComfyUI Wiki and community discussions on Reddit.

Software Dependencies

If installing locally:

- Python 3.8+

- PyTorch 2.0+

- CUDA 11.7+ (for GPU acceleration)

- FFmpeg (for video processing)
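Before installing, a short script can confirm which of these prerequisites are already visible from Python. This is a minimal sketch using only the standard library; it checks that PyTorch is importable and FFmpeg is on the PATH, but does not verify the PyTorch or CUDA versions.

```python
import importlib.util
import shutil
import sys


def check_environment():
    """Report which of the listed prerequisites are visible from Python."""
    return {
        "python>=3.8": sys.version_info >= (3, 8),
        "torch installed": importlib.util.find_spec("torch") is not None,
        "ffmpeg on PATH": shutil.which("ffmpeg") is not None,
    }


for name, ok in check_environment().items():
    print(f"{name}: {'ok' if ok else 'missing'}")
```

Any item reported as missing should be installed before attempting a local run.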

For installation guides and troubleshooting, visit the GitHub repository and Alibaba Cloud's official documentation.

Comparing Wan 2.1 with Other Video AI Models

How does Wan 2.1 stack up against other popular video generation models?

Wan 2.1 vs. Proprietary Models

| Feature | Wan 2.1 | OpenAI's Sora | Runway Gen-2 |
|---|---|---|---|
| Accessibility | Open-source | Limited access | Subscription-based |
| Cost | Free/Low-cost | Not publicly priced | $15-$95/month |
| Video Length | 5-10 seconds | Up to 60 seconds | Up to 16 seconds |
| Resolution | Up to 720p | Up to 1080p | Up to 1080p |
| Hardware Req. | Consumer GPUs | Cloud-only | Cloud-only |
| Customization | High | Limited | Medium |

Performance Comparison

Wan 2.1 excels in:

- Movement quality and physics

- Running locally on consumer hardware

- Open-source flexibility and customization

Areas where other models may have advantages:

- Longer video generation (Sora)

- Higher resolution output (commercial models)

- Better handling of human faces and complex interactions (specialized models)

The open-source nature of Wan 2.1 means it's continuously improving as the community contributes enhancements and optimizations.

Future of AI Video Generation

The release of Wan 2.1 represents an important milestone in democratizing AI video generation, but this is just the beginning. Here's what we might expect in the near future:

Upcoming Developments

- Longer Videos: Future iterations will likely extend beyond the current 5-10 second limitation

- Higher Resolutions: Expect 1080p and even 4K capabilities as models become more efficient

- Better Temporal Consistency: Improved handling of complex movements and scene changes

- Multimodal Integration: Combining video, audio, and interactive elements seamlessly

- Specialized Models: Versions optimized for specific use cases like product demonstrations or nature scenes

Potential Applications

As AI video generation becomes more accessible and capable, we'll see it transforming numerous industries:

- Content Creation: Enabling small creators to produce professional-quality videos

- E-commerce: Dynamic product demonstrations from static catalog images

- Education: Visualizing complex concepts through animation

- Gaming: Generating game assets and cinematics

- Virtual Reality: Creating immersive environments on demand

Conclusion

Wan 2.1 and the WanVideo platform represent a significant democratization of video generation technology. By making powerful AI video creation accessible to everyone—from hobbyists to professionals—Alibaba's Tongyi Lab has opened new creative possibilities that were previously available only to those with extensive resources.

Whether you're looking to create stunning text-to-video content, bring your static images to life with image-to-video transformation, or explore the cutting edge of AI creativity, Wan 2.1 provides a powerful and accessible entry point.

As with any emerging technology, the most exciting applications are likely those we haven't even imagined yet. The open-source nature of Wan 2.1 ensures that innovation will continue at a rapid pace, with contributions from developers and creators worldwide pushing the boundaries of what's possible.

The future of video creation is here—and it's more accessible than ever. Why not visit the WanVideo Official Site today and start creating your own AI-powered videos? Your imagination is the only limit.